17 Geospatial data analysis

In this section we will provide some realistic data analyses of geographic data including Geospatial Positionning System (GPS) and geolocation data, which is then combined with phenotypic data. The objectives of the analyses are intended to mimic the real-life usage of data as described in Burgoine et al.

17.1 Introducing the analysis

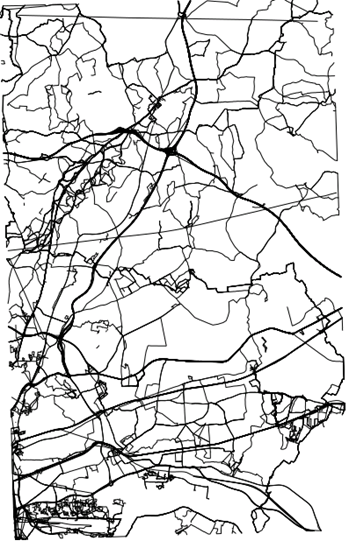

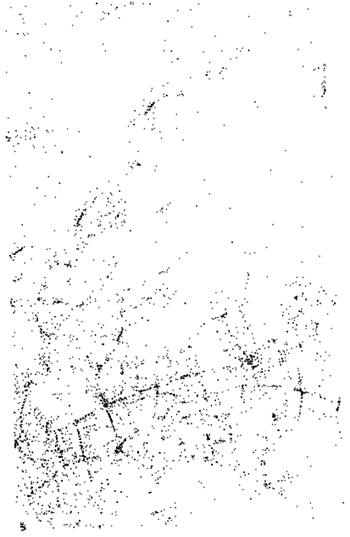

In this example we consider GPS traces captured by individuals in some eastern districts of London, publicly available from OpenStreetMap. For this example analysis, we will imagine these 819 GPS traces as commutes between individuals’ home and work. If these data were actual commutes, they would be highly sensitive as they identify an individual’s home and work location. Therefore it is less likely that commuting data would be readily accessible for traditional pooled analysis and a federated approach with DataSHIELD could be more practical. We also have real data on the location of 6100 fast food or takeaway outlets in this same area, sourced from the Food Standards Agency. This is shown in the figure below. We manufactured a corresponding data set on individuals’ BMI, age, sex, total household income, highest educational qualification (as proxies for individual level socioeconomic status) and smoking status. The purpose of the analysis is to test the association between exposure to takeaway food on a commute with BMI, using the other variables to adjust for confounding factors. Given that reducing obesity is a public health priority, this type of research could help inform local authority policies towards food outlet location, type and density. We illustrate how the tools available in the dsGeo package allow this question to be addressed.

Figure 17.1: GPS traces in eastern London which could be imagined to be commutes between homes and workplaces

Figure 17.2: Location of food outlets in eastern London

17.2 Setting up the analysis

Note that in this example, the data are held in a single resource. There is scope for the data to be held in different resources.

In order to perform geospatial data analyses, we need first to login and assign resources to DataSHIELD. This can be performed using the as.resource.object() function. The data have been uploaded to the server and configured as resources.

builder <- DSI::newDSLoginBuilder()

builder$append(server = "study1", url = "https://opal-demo.obiba.org",

user = "dsuser", password = "password",

resource = "RSRC.gps_data", driver = "OpalDriver")

logindata <- builder$build()

conns <- DSI::datashield.login(logins = logindata, assign = TRUE,

symbol = "gps.res")

# Assign additional datasets as resources for the location of the food outlets / takeaways and

# the participant phenotype data

datashield.assign.resource(conns, symbol="takeaway.res", resource="RSRC.takeaway_gps")

datashield.assign.resource(conns, symbol="participant.res", resource="RSRC.gps_participant")

# Assign to the original R classes (e.g Spatial)

datashield.assign.expr(conns, symbol = "takeaway",

expr = quote(as.resource.object(takeaway.res)))

datashield.assign.expr(conns, symbol = "journeys",

expr = quote(as.resource.object(gps.res)))

datashield.assign.expr(conns, symbol = "participant",

expr = quote(as.resource.data.frame(participant.res)))Now, we can see that the resources are loaded into the R servers as their original class

$study1

[1] "SpatialPointsDataFrame"

attr(,"package")

[1] "sp"$study1

[1] "SpatialLinesDataFrame"

attr(,"package")

[1] "sp"$study1

[1] "data.frame"In the same way that BioConductor provides convenient data structures for ’omics data, the sp package allows each row of data to have a corresponding set of geometries. So if a row of data has information about an individual (age, BMI, etc.) it can also have a set of many points defining geometries such as points, lines and polygons.

# Standard ds.summary can be used on the 'data' part of the spatial dataframe

ds.summary('journeys$age')$study1

$study1$class

[1] "numeric"

$study1$length

[1] 819

$study1$`quantiles & mean`

5% 10% 25% 50% 75% 90% 95% Mean

41.23623 42.76502 46.61040 52.53669 58.73395 62.25656 63.72577 52.58792 We have provided an additional function to allow a summary of the geometries to be provided.

$study1

$class

[1] "SpatialLinesDataFrame"

attr(,"package")

[1] "sp"

$bbox

min max

x 538171.2 556078.4

y 179796.4 208063.6

$is.projected

[1] TRUE

$proj4string

[1] "+init=epsg:27700 +proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 +y_0=-100000 +datum=OSGB36 +units=m +no_defs +ellps=airy +towgs84=446.448,-125.157,542.060,0.1502,0.2470,0.8421,-20.4894"

$data

id age sex inc fsmoke fedu

Min. : 1.0 Min. :40.02 Min. :0.0000 Min. : 2479 current:240 Secondary:156

1st Qu.:218.5 1st Qu.:46.61 1st Qu.:0.0000 1st Qu.:40545 former :169 Higher :393

Median :426.0 Median :52.54 Median :0.0000 Median :50949 never :410 Advanced :270

Mean :427.6 Mean :52.59 Mean :0.4872 Mean :50395

3rd Qu.:640.5 3rd Qu.:58.73 3rd Qu.:1.0000 3rd Qu.:59912

Max. :849.0 Max. :64.99 Max. :1.0000 Max. :99301

BMI

Min. : 17.01

1st Qu.: 22.42

Median : 24.34

Mean : 25.13

3rd Qu.: 26.60

Max. :116.72

attr(,"class")

[1] "summary.Spatial"17.3 Data manipulation

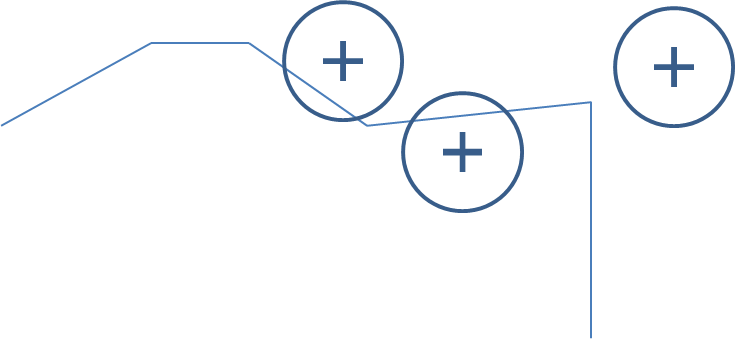

In our analysis we need to define how we determine whether an individual has been ‘exposed’ to a food outlet. First we will define a 10m buffer around the location of the food outlet. If an individual’s GPS trace falls within that buffered region, we will say that they are ‘exposed’ to that outlet. The food outlets are defined as points, and after the buffer is applied they become polygons.

# Add a buffer to each takeaway - now they are polygons

ds.gBuffer(input = 'takeaway', ip_width=10, newobj.name = 'take.buffer', by_id=TRUE)

ds.geoSummary('take.buffer')$study1

$class

[1] "SpatialPolygonsDataFrame"

attr(,"package")

[1] "sp"

$bbox

min max

x 538336.1 556245.1

y 179810.1 207683.1

$is.projected

[1] TRUE

$proj4string

[1] "+init=epsg:27700 +proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 +y_0=-100000 +datum=OSGB36 +units=m +no_defs +ellps=airy +towgs84=446.448,-125.157,542.060,0.1502,0.2470,0.8421,-20.4894"

$data

BusinessName BusinessTypeID

Tesco : 43 Min. : 1

Subway : 32 1st Qu.: 1

Costa Coffee : 24 Median :4613

Sainsbury's : 22 Mean :4210

Londis : 19 3rd Qu.:7843

Domino's Pizza: 17 Max. :7846

(Other) :5943

attr(,"class")

[1] "summary.Spatial"To simplify the next step, we remove the data part of the SpatialPolygonsDataFrame to leave the polygons

#extract the polygons

ds.geometry(input_x = 'take.buffer', newobj.name = 'take.buffer.strip')

ds.geoSummary('take.buffer.strip')$study1

$class

[1] "SpatialPolygons"

attr(,"package")

[1] "sp"

$bbox

min max

x 538336.1 556245.1

y 179810.1 207683.1

$is.projected

[1] TRUE

$proj4string

[1] "+init=epsg:27700 +proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000 +y_0=-100000 +datum=OSGB36 +units=m +no_defs +ellps=airy +towgs84=446.448,-125.157,542.060,0.1502,0.2470,0.8421,-20.4894"

attr(,"class")

[1] "summary.Spatial"Now, we take the intersection of each individual’s commute with each food outlet as shown in the figure below.

Figure 17.3: Food outlets with a buffer are shown by a cross surrounded by a circle. The line segments represent the GPS trace. In this case there is an intersection with 2 of the 3 food outlets.

This results in a list of individuals, with each list element being a numeric vector containing the ids of the food outlet buffers that were intersected by the GPS trace. These are the food outlets that the individual was ‘exposed’ to. We then convert the numeric vectors to counts by applying the length function to the list.

# Do the intersection of journeys with buffered takeaways

ds.over(input_x = 'journeys', input_y = 'take.buffer.strip',

newobj.name = 'my.over', retList = TRUE)

ds.lapply(input = 'my.over',newobj.name = 'count.list', fun = 'length')The result of the lapply needs to be unlisted, and we check the result looks reasonable

$is.object.created

[1] "A data object <counts> has been created in all specified data sources"

$validity.check

[1] "<counts> appears valid in all sources"$study1

$study1$class

[1] "integer"

$study1$length

[1] 819

$study1$`quantiles & mean`

5% 10% 25% 50% 75% 90% 95% Mean

0.000000 0.000000 0.000000 0.000000 1.000000 5.000000 9.000000 1.882784 17.4 Generating the final result

Finally we complete the association analysis and see that BMI is positively associated with the count of food outlets that an individual is exposed to on commutes (see the ‘counts’ coefficient). This type of analysis could be used to convey a public health message about density and location of takeaway food outlets.

$is.object.created

[1] "A data object <geo.df> has been created in all specified data sources"

$validity.check

[1] "<geo.df> appears valid in all sources"result <- ds.glm(formula = 'BMI ~ age + sex + inc + fsmoke + fedu + counts',

data = 'geo.df', family = 'gaussian')

result$coefficients Estimate Std. Error z-value p-value low0.95CI high0.95CI

(Intercept) 2.379500e+01 6.334955e-01 37.5614341 0.000000e+00 2.255337e+01 2.503663e+01

age 2.698037e-02 1.023046e-02 2.6372581 8.357921e-03 6.929033e-03 4.703171e-02

sex -2.960308e+00 1.447462e-01 -20.4517233 5.799002e-93 -3.244006e+00 -2.676611e+00

inc -7.647467e-06 5.202464e-06 -1.4699701 1.415699e-01 -1.784411e-05 2.549176e-06

fsmokeformer 2.849097e-01 2.070037e-01 1.3763509 1.687130e-01 -1.208101e-01 6.906295e-01

fsmokenever 3.906555e-01 1.675560e-01 2.3314925 1.972740e-02 6.225183e-02 7.190592e-01

feduHigher 8.727664e-02 1.951124e-01 0.4473147 6.546479e-01 -2.951367e-01 4.696899e-01

feduAdvanced -1.417767e-01 2.076311e-01 -0.6828300 4.947143e-01 -5.487262e-01 2.651727e-01

counts 7.957629e-01 1.137431e-02 69.9614332 0.000000e+00 7.734697e-01 8.180562e-01This closes the access to the resources